This article was developed with the intention to help IBM MQ Administrators gain a better understanding of Dead Letter Queues (DLQ) and DLQ Handlers for RTP administration. It provides basic scenarios and explanations within IBM MQ.

Becoming a Real Time Payments (RTP) “participant” has numerous challenges. For many financial entities, this is their first exposure to IBM MQ as a messaging system, which is a requirement to join the network.

The Clearing House’s RTP represents the next evolution of payments innovation for instant transfer of funds and confirmation. Financial institutions, big and small, are offering Real Time Payments to their customers to stay competitive.

IBM MQ and RTP

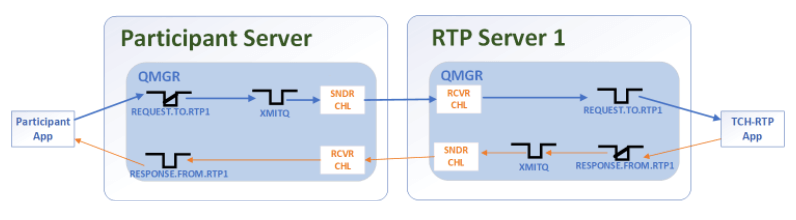

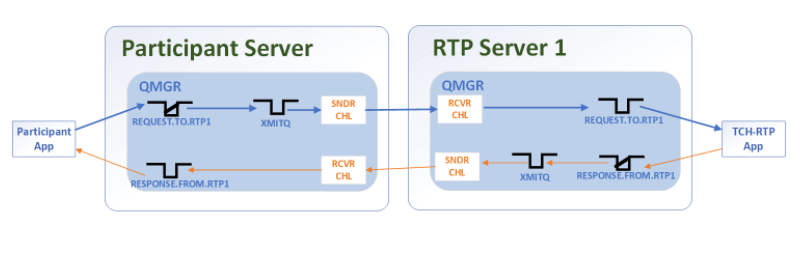

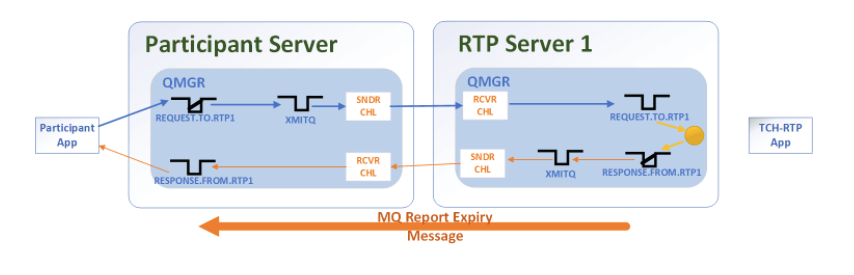

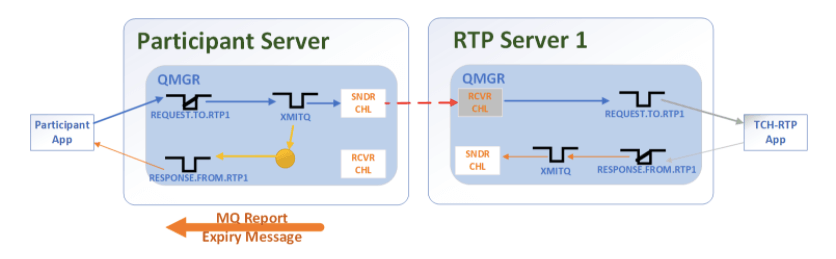

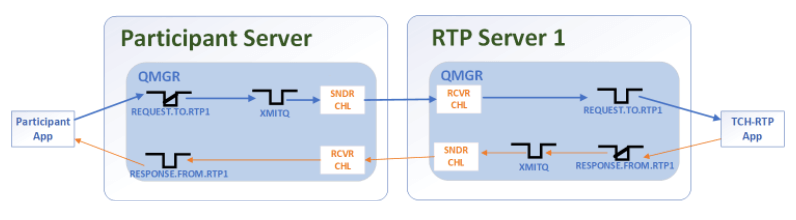

At its fundamentals, the RTP network was built upon the concept of MQ request/reply. For example, a participant sends a request message following the ISO 20022 XML standard.

The overall lifespan of the “transaction” is fifteen (15) seconds, but the “hops” between the participant to RTP to participant and back are two (2) seconds each between its respective queue manager. The Clearing House’s RTP documentation provides a chart to explain further, and is outside the narrative of this article.

When everything works through “happy path”, it’ll be as shown above: the participant app sends a request message and awaits a response within the 15 second window.

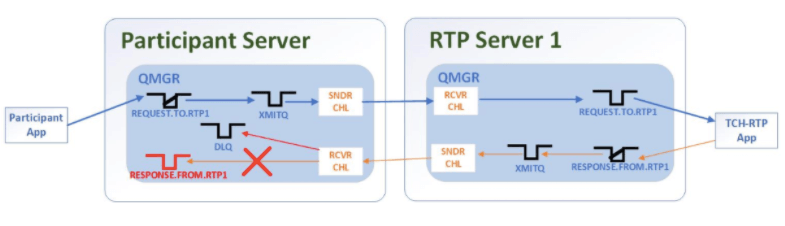

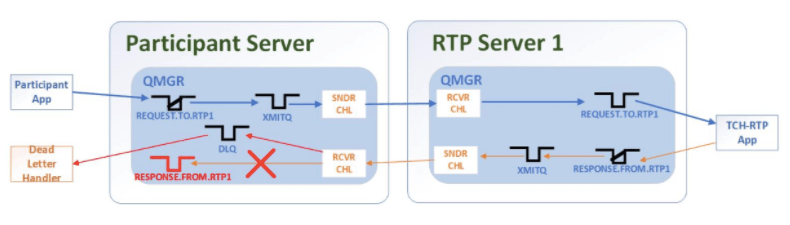

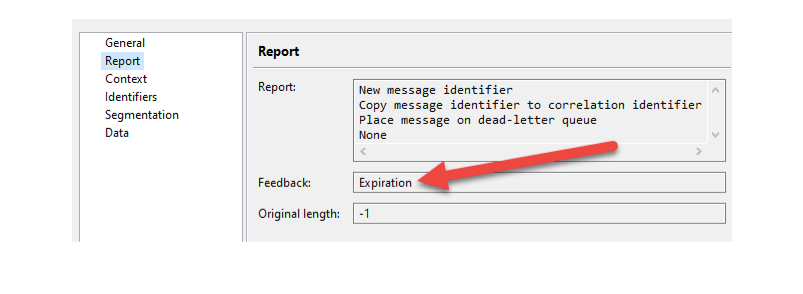

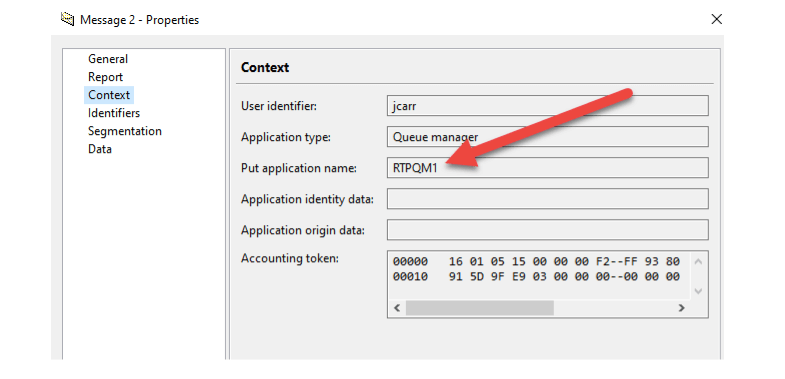

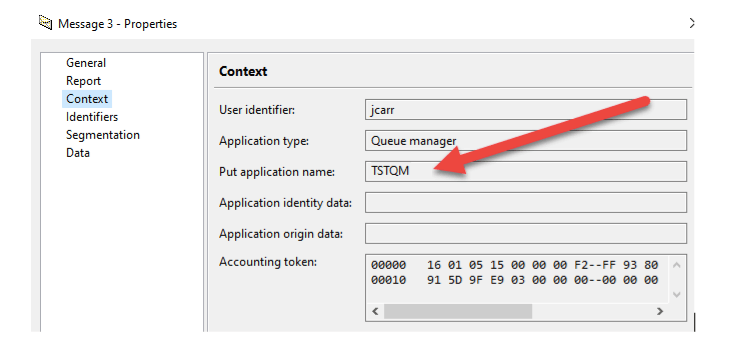

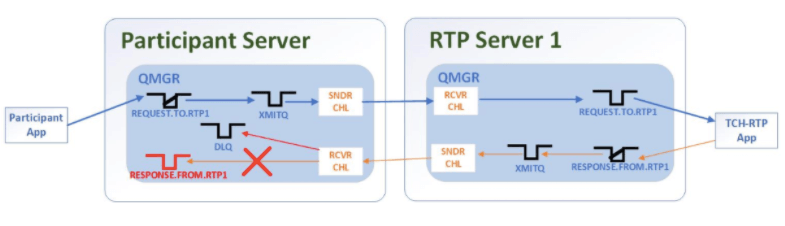

But what happens if something goes wrong with the reply message being placed on RESPONSE.FROM.RTP1?

THE DEAD LETTER QUEUE AND THE DEAD LETTER HANDLER

A feature of IBM MQ is the assured delivery of the messages to the destination. There can be number of scenarios where MQ may not be able to deliver messages to the destination queue, and all those are routed to the Dead Letter Queue (DLQ) defined for the queue manager.

Before placing message to the DLQ, IBM MQ attaches Dead Letter Header (DLH) to each message which contains all the required information to identify why the message was placed in DLQ and what was the intended destination. This DLH plays a very important role while handling the messages on DLQ.

A feature of IBM MQ is the assured delivery of the messages to the destination. There can be number of scenarios where MQ may not be able to deliver messages to the destination queue, and all those are routed to the Dead Letter Queue (DLQ) defined for the queue manager.

Before placing message to the DLQ, IBM MQ attaches Dead Letter Header (DLH) to each message which contains all the required information to identify why the message was placed in DLQ and what was the intended destination. This DLH plays a very important role while handling the messages on DLQ.

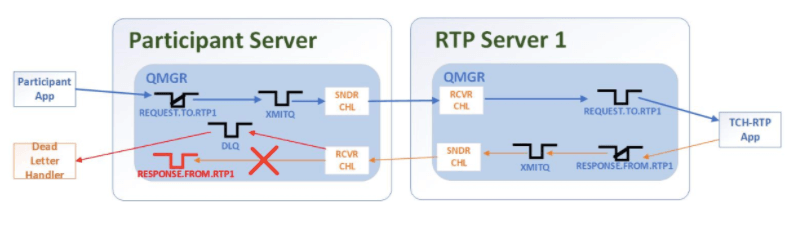

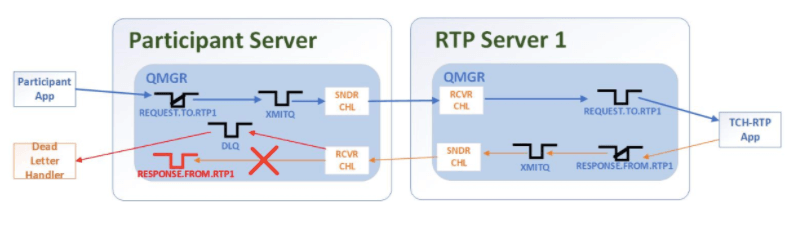

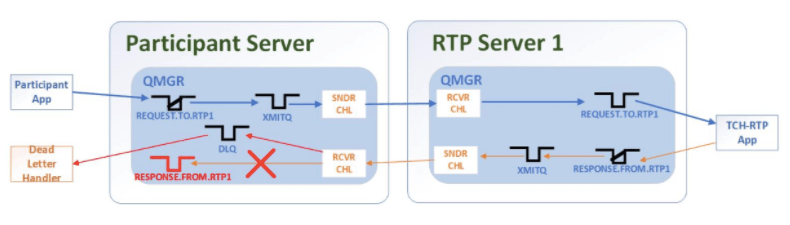

IBM has provided a Dead Letter Handler utility called “runmqdlq”. Its purpose is to handle messages which are placed on the DLQ. With it, the routine takes necessary action on the messages placed on the DLQ. A rule table can be created with required control data and rules and the same can be used as an input to the Dead Letter Handler.

TYPES OF DEAD LETTER MESSAGES

In a nutshell, messages placed on the DLQ are in two categories:

– Replayable Messages

– Non-RePlayable Messages

For Replayable Messages, these are placed on the DLQ due to some temporary issues like the destination queue is full or the destination queue is put disabled etc. These messages can be replayed without any further investigation. Also, re-playable messages can be performed by using dlqhandler.

For Non-Replayable Messages, these are placed on the DLQ due to issues which are not temporary in nature (incorrect destination, data conversion errors, mqrc_unknown_reason). These messages require further investigation to identify the cause on why those were placed on the DLQ. Replaying this category of messages with dlqhandler most likely will again arrive on DLQ. Therefore, they would require an MQ Admin to investigate further.

THE DLQ HANDLER SETUP

Replayable messages can be run again (reprocessed) by using the dlqhandler. In short, the dlqhandler is setup as follows:

1) Creating the dlqhandler rule file

2) Starting the dlqhandler

The rule file is a simple text-based flat file with configuration instructions as to how a message should be handled.

COMMON SCENARIOS

A number of scenarios are possible, based on the design of MQ infrastructure at an organization, but the most common scenarios are:

- SINGLE-PURPOSE: Replayable messages are “replayed” to their original queue and non-replayable messages are placed on a single designated queue for an MQ admin to investigate

- MULTI-PURPOSE: Replayable messages are “replayed” to their original queue and non-replayable messages are placed on a designated queue for each application for an MQ admin to investigate

- HYBRID-PURPOSE: Replayable and non-replayable messages from specific queues are placed on a designated queue for each application for an MQ admin to investigate, while other replayable messages are “replayed” to their original queue, and non-replayable messages are placed on a single designated queue for an MQ admin to investigate.

It is worth noting that since messages being put to the DLQ because of their destination queue being full (MQRC_Q_FULL) or put inhibited (MQRC_PUT_INHIBITIED) will be in constant retry mode. That said, it’s important to have a monitoring and alerting setup on a queue manager and associated mq objects to send an alert (email, page, etc.) for someone to investigate why a queue is approaching 100% depth or put inhibited.

SCENARIO 1: SINGLE PURPOSE

This is a very common requirement. This can be achieved by defining the rule file.

Contents of <scenario1.rul> file:

******=====Retry every 30 seconds, and WAIT for messages =======*****

RETRYINT(30) WAIT(YES)

***** For reason queue full and put disabled *******

**** retry continuously to put the message on the original queue ****

REASON(MQRC_Q_FULL) ACTION(RETRY) RETRY(999999999)

REASON(MQRC_PUT_INHIBITED) ACTION(RETRY) RETRY(999999999)

**** For all other dlq messages, move those to designated queue *****

ACTION(FWD) FWDQ(UNPLAYABLE.DLQ) HEADER(YES)

******=========================================================*******

SCENARIO 2: MULTI PURPOSE

Suppose there are 3 applications using the qmgr: APP1, APP2 and APP3. The following are the queues for these applications and dedicated queue to hold dlq messages for each application:

APP1 –

APP1.QL

DLQ – APP1.ERR.DLQ

APP2 –

APP2.QL

DLQ – APP2.ERR.DLQ

APP3 –

APP3.QL

DLQ – APP3.ERR.DLQ

We now have to write rules based on the DESTQ for each application. Below the example rule file for this scenario.

Contents of <scenario2.rul> file:

******=====Retry every 30 seconds, and WAIT for messages =======*****

RETRYINT(30) WAIT(YES)

***** For reason queue full and put disabled *******

**** retry continuously to put the message on the original queue ****

REASON(MQRC_Q_FULL) ACTION(RETRY) RETRY(999999999)

REASON(MQRC_PUT_INHIBITED) ACTION(RETRY) RETRY(999999999)

******* For APP1, forward messages to APP1.ERR.DLQ ******

DESTQ(APP1.QL) ACTION(FWD) FWDQ(APP1.ERR.DLQ) HEADER(YES)

******* For APP2, forward messages to APP2.ERR.DLQ ******

DESTQ(APP2.QL) ACTION(FWD) FWDQ(APP2.ERR.DLQ) HEADER(YES)

******* For APP3, forward messages to APP3.ERR.DLQ ******

DESTQ(APP3.QL) ACTION(FWD) FWDQ(APP3.ERR.DLQ) HEADER(YES)

**** For all other dlq messages, move those to designated queue *****

ACTION(FWD) FWDQ(GENEAL.ERR.DLQ) HEADER(YES)

*********=========================================================******

SCENARIO 3: HYBRID PURPOSE

This is an example of regardless as to why the message was put on the DLQ for APP1.QL, the message needs to be forwarded to APP1.ERR.DLQ.

For other non-APP1 DLQ messages, attempt to replay them to their intended destination queue for queue full and queue inhibited messages.

If the retry interval is > 10 or it’s a non-replayable message, forward that message to the UNPLAYABLE.DLQ.

Contents of <scenario3.rul> file

******=====Retry every 30 seconds, and WAIT for messages =======*****

RETRYINT(30) WAIT(YES)

******* For APP1, forward messages to APP1.ERR.DLQ ******

DESTQ(APP1.QL) ACTION(FWD) FWDQ(APP1.ERR.DLQ) HEADER(YES)

***** For reason queue full and put disabled *******

**** retry 10 times to put the message on the original queue ****

REASON(MQRC_Q_FULL) ACTION(RETRY) RETRY(10)

REASON(MQRC_PUT_INHIBITED) ACTION(RETRY) RETRY(10)

**** For all other dlq messages, move those to designated queue *****

ACTION(FWD) FWDQ(UNPLAYABLE.DLQ) HEADER(YES)

******=========================================================*******

STARTING THE DLQHANDLER

Once the rule file is created, you can configure dlqhandler startup in the following ways:

1) Manually starting the dlqhandler and keep it running.

runmqdlq QMGR_DLQ QMGR_NAME < qrulefile.rul

2) Configure dlqhandler as a service in the queue manager

SPECIAL NOTE FOR WINDOWS SERVERS:

DEFINE SERVICE(dlqhandler) +

SERVTYPE(SERVER) +

CONTROL(MANUAL) +

STARTCMD(‘c:\var\bin\mqdlq.bat’) +

DESCR(‘dlqhandler service’) +

STARTARG(‘DLQ QMNAME C:\var\rulefiles\qrulefile.rul’) +

STOPCMD(‘c:\var\bin\stopdlh.bat’) +

STOPARG(‘DLQ QMNAME’) +

STDOUT(‘/path/dlq.log’) +

STDERR(‘/path/dlq_error.log’)

NOTE: For Windows, because of the nature of how it doesn’t handle redirects “<” like Linux does, a wrapper script must be written.

Contents of mqdlq.bat (start command):

echo alt ql(%1) get(enabled) | runmqsc %2

runmqdlq.exe %1 %2 %3

Contents of stopldh.bat (stop command):

echo alt ql(%1) get(disabled) | runmqsc %2

Enabling and disabling the DLQ essentially kills the dead letter handler.

3) Configure triggering at DLQ to start dlq handler whenever first message arrives on the queue.

You can do it by simply following the steps on how to configure triggering on any queue. Please refer the following link for more information on triggering.

Once you setup triggering, dlqhandler will be started by triggering based on the condition that you set. You don’t need to have dlqhandler running all the time as it can be started again by triggering. Due to above reason, you don’t need WAIT(YES) in the rule file, you can change it to WAIT(NO).

WANT TO LEARN MORE?

TxMQ delivers an RTP Starter Pack to accelerate participant onboarding. You can learn more about our starter pack here: https://www.txmq.com/RTP/

Our deep industry experience and subject matter experts are available to solve your most complex challenges. We deliver solutions and innovations to do business in an ever-changing world, to guide & support your organization through the digital transformation journey.

TxMQ

Imagine. Transform. Engage.

We’re here to help you work smart in the new economy.

This post was authored by John Carr, Principle Integration Architect at TxMQ. You can follow John on LinkedIn here.